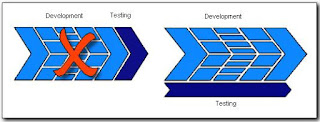

To make the testing process as effective as possible, it needs to be viewed as one with the development process (Fig. 2.1). In many organizations, testing is an ad hoc process performed in the last stage of a project, if it is performed at all.

Figure 2.1. Testing should be conducted throughout the development process.

In order to reduce testing costs, a structured and well defined way of testing needs to be implemented.

Certain projects may appear too small to justify extensive testing. However, one should consider the impact of errors and not the size of the project.

It is important to remember that testing can only show the presence of errors, not their absence.

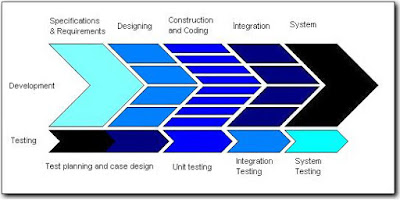

Figure 2.2 gives a general overview of both the development process and the testing process, and also shows the relation between them. In practice, as stated earlier, the two processes should be viewed and handled as one. The colors in the figure show the relations between phases. For example, system testing of an application checks that it meets the requirements specified at the start of development. A large part of integration testing is to check the logical design produced in the design phase. The relationships between the phases are based partly on the V-model as presented by Mark Fewster and Dorothy Graham (Software Test Automation, 1999).

Figure 2.2. Relations between test phases and development phases.

As mentioned above, a well-defined and well-understood way of testing is essential to make the testing process as effective as possible. To produce high-quality software, one has to view the testing process as a planned and systematic set of activities. The activities included are Test Management, Test Planning, Test Case Design & Implementation and Test Execution & Evaluation. Test management will not be discussed further in this report.

1 Test planning

The purpose of test planning is to plan and organize resources and information, and to describe the objectives, scope and focus of the test effort. The first step is to identify and gather the requirements for the test. For the requirements to be of use for the test, they need to be verifiable or measurable. An important part of test planning is risk analysis. When assigning a risk factor, one must examine the likelihood of errors occurring, the effect of the errors and the cost caused by the errors. To make the analysis as exhaustive as possible, each requirement should be reviewed. The purpose of the risk analysis is to identify what needs to be prioritized when performing the test. Risk analysis will be discussed further later in this report.
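As a minimal sketch of how such a risk analysis could drive prioritization, the example below scores each requirement on the three factors mentioned above. The 1 to 5 scales, the rule of multiplying the factors, and the requirement names are all assumptions made for illustration, not a method prescribed here.

```python
from dataclasses import dataclass


@dataclass
class RequirementRisk:
    """Risk estimate for one requirement (hypothetical scale: 1 = low, 5 = high)."""
    name: str
    likelihood: int  # how likely errors are to occur
    effect: int      # how severe the effect of an error would be
    cost: int        # how costly an error would be

    @property
    def score(self) -> int:
        # Assumed scoring rule: the product of the three factors.
        return self.likelihood * self.effect * self.cost


def prioritize(requirements):
    """Return the requirements ordered from highest to lowest risk score."""
    return sorted(requirements, key=lambda r: r.score, reverse=True)


# Invented example requirements and ratings.
requirements = [
    RequirementRisk("login", likelihood=2, effect=5, cost=4),
    RequirementRisk("search", likelihood=4, effect=2, cost=2),
    RequirementRisk("payment", likelihood=3, effect=5, cost=5),
]

for r in prioritize(requirements):
    print(f"{r.name}: {r.score}")
```

Requirements at the top of the resulting list would be the first candidates for test effort.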

To create a complete test plan, resources need to be identified and allocated. Resources include:

- Human - Who and how many are needed

- Knowledge - What skills are needed

- Time - How much time needs to be allotted

- Economic - What is the estimated cost

- Tools - What kind of tools are needed (hardware, software, platforms, etc.)

A good test plan should also include stop criteria. These can be very intricate to define, since the actual quality of the software is difficult to determine. Some commonly used criteria are (Rick Hower, 2000):

- Deadlines (release deadlines, testing deadlines, etc.)

- Test cases completed with certain percentage passed

- Test budget depleted

- Coverage of requirements reaches a specified point

- Bug rate falls below a certain level

- Beta or alpha testing period ends

Since it is very rare that every error in today’s complex software is found, one can go on testing forever if stop criteria aren’t used. Specific criteria should therefore be defined for each separate test case in the process.
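A few of the stop criteria listed above can be expressed as simple checks. The sketch below is only an illustration: the `Progress` fields and the threshold values are hypothetical and would be taken from the test plan in practice.

```python
from dataclasses import dataclass


@dataclass
class Progress:
    """Snapshot of a test effort; all field names are assumptions for this sketch."""
    cases_run: int
    cases_passed: int
    budget_left: float
    requirements_total: int
    requirements_covered: int


def met_stop_criteria(progress, min_pass_rate=0.95, min_coverage=0.90):
    """Return the names of the stop criteria that are currently met."""
    met = []
    if progress.cases_run and progress.cases_passed / progress.cases_run >= min_pass_rate:
        met.append("pass rate")
    if progress.budget_left <= 0:
        met.append("budget depleted")
    if (progress.requirements_total
            and progress.requirements_covered / progress.requirements_total >= min_coverage):
        met.append("requirement coverage")
    return met


# Invented numbers: 192 of 200 cases passed, 34 of 40 requirements covered.
snapshot = Progress(cases_run=200, cases_passed=192, budget_left=1500.0,
                    requirements_total=40, requirements_covered=34)
print(met_stop_criteria(snapshot))
```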

The outcome of test planning should of course be the test plan, which will function as the backbone, providing the strategies to be used throughout the test process.

2 Test case design & implementation

The main objective of test case design is to identify the different test cases or scenarios for every software build. These cases should describe the test procedures and how to perform the test in order to reach the goal of each case. For each test case, the particular conditions for that test should be described, as well as the specific data needed and the expected result. If testing has been done on the subject before, reusing old cases becomes important.

The design of test cases is based on what is to be tested. Each feature often presents unique needs, so testing should be done in small sections to cope with the resulting differences in test case design. When testing a single feature there are a number of things to consider: how does it work, what may cause it to crash, and what are the possible variables?

Both data input and user actions should exercise the designed logic so that we get answers to two questions: do we get the expected answer, and what happens when wrong input is used? If, for instance, you are prompted to enter your age in a field, the logic behind it may expect a number between 0 and 100, but you might enter a letter by mistake. What happens then? If the application is not designed to catch this mistake, it may crash or at least give an unexpected answer. It is therefore important to test that the feature rejects wrong input while still accepting the expected input.

Considering the many ways to make similar mistakes, there are thousands of different inputs that could be tested. It is not possible to test all of them. Instead, one should choose inputs that represent each possible group of input well. This procedure is often referred to as equivalence partitioning, and it is commonly combined with boundary testing, which picks values at the edges of each group.
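The age field example can be sketched as follows. The validator `parse_age` is a hypothetical stand-in for the application logic, assumed here to accept whole numbers from 0 to 100 and reject everything else. The test inputs take one representative from each group of input plus the values on either side of each boundary.

```python
def parse_age(text):
    """Hypothetical validator: return the age as an int, or None if the input is rejected."""
    try:
        age = int(text.strip())
    except ValueError:
        return None  # not a whole number (letters, empty input, decimals)
    return age if 0 <= age <= 100 else None


# (input, expected result): representatives of each group and the boundary values.
cases = [
    ("50", 50),     # typical valid value
    ("0", 0),       # lower boundary, valid
    ("100", 100),   # upper boundary, valid
    ("-1", None),   # just below the lower boundary
    ("101", None),  # just above the upper boundary
    ("a", None),    # a letter instead of a number
    ("", None),     # empty input
    ("4.5", None),  # not a whole number
]

for text, expected in cases:
    assert parse_age(text) == expected, f"unexpected result for {text!r}"
print("all cases behaved as expected")
```

Eight inputs stand in for the thousands that could be entered, which is the point of choosing representatives.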

For the results of the test to be useful, and to know when the stop criteria are reached, the completeness of the test cases is very important.

When creating tests based on the test cases, certain objectives should be addressed.

- Make your tests as reusable as possible

- Make your tests easy to maintain

- Use existing tests when possible

3 Test execution & evaluation

When running your tests, the results have to be handled in a defined manner. Was the test completed or did it halt? Are the results of the test the expected ones, and how can it be verified that the results originate from a correct run of the test?

When the actual results from a test do not match the expected results, certain actions have to be taken. The first is to determine why they differ. Does the error lie in the tested application or, for example, in the test script?

When errors are found, they need to be properly reported. Information on the bug needs to be communicated so that developers and programmers can solve the problem. These reports should include, among other things, the application name and version, the test date, the tester’s name and a description of the error that occurred.
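One way to make sure no report omits these fields is to give the report a fixed structure. The sketch below is only an assumption about how such a record might look; the class name, field names and summary format are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class BugReport:
    """Minimal bug report holding the fields listed above (names are hypothetical)."""
    application: str
    version: str
    test_date: date
    tester: str
    description: str

    def summary(self) -> str:
        # One-line form suitable for a bug list or a notification.
        return (f"[{self.application} {self.version}] "
                f"{self.test_date.isoformat()} {self.tester}: {self.description}")


# Invented example data.
report = BugReport(
    application="WebShop",
    version="1.2",
    test_date=date(2000, 5, 17),
    tester="A. Tester",
    description="Age field accepts letters and the page crashes on submit.",
)
print(report.summary())
```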

When a bug has been properly taken care of, the software needs to be re-tested to verify that the problems have been solved, and that the corrections made did not create new conflicts.

During development and testing, many changes will be made to the software as well as to its environment. When such changes are made, there is a need to ensure that the program still functions as required. This kind of testing is called regression testing. The difference between a re-test and a regression test is that the latter is done when changes have been made to the program regarding, for example, its functionality, whereas a re-test is done to test the software after bug fixing.

4 Test phases

As the testing process should be viewed as parallel with development, it will go through certain phases. As development moves forward, the scope and targets for testing change, starting with each individual piece and ending with the complete system. The goal is that every part is tested and fully functioning before being integrated with other parts. It is important to note that none of these phases is completely separated from the others. There is no definite border between where the different phases start and end. They can be seen as an approximate overall guideline for how to perform a successful test. Several authors, including Bill Hetzel and Hans Schaefer, describe the test process as consisting of the following phases:

- Unit testing

- Integration testing

- System testing

- Acceptance testing

Unit testing

Also called module testing. Testing at this stage is done on the isolated unit.

Integration testing

When units interact with each other, one must ensure that the communication between them works.

Conflicts often occur when units are developed separately or when the syntax to be used is not communicated sufficiently.

System testing

When the system is complete, testing of the system as a whole can commence. Test cases with actual user behaviour can be implemented, and non-functional tests, such as usability and performance tests, may be run.

Acceptance testing

The purpose is to let end users or customers decide whether to accept the system or not. Do the users feel comfortable with the product, and does it perform the activities as required?

5 Test types

The previous text describes the general guidelines for testing, whether it is software applications or web applications. But as the title of this report implies, the scope is centred on how to perform successful testing on web applications and how this process differs from the general test process.

Before proceeding to this area, certain issues have to be addressed. One must be acquainted with the different types of tests that are performed in the different stages of the process. The text that follows briefly describes the most commonly used terms for test types, with a focus on the web.

There are no definite borders between the types, and several of them can seem to overlap with adjacent areas. Needless to say, there are several opinions on this matter, and we base the following descriptions on authors such as Hans Schaefer, Bill Hetzel, Tim Van Tongeren and Hung Q. Nguyen.

Functionality testing

The purpose of this type of test is to ensure that every function works according to the specifications. Functions apply to a complete system as well as to a separate unit. In the context of the web, functionality testing can, for example, include testing links, forms, Java applets or ActiveX applications.
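As a small illustration of link testing, the sketch below collects the link targets from a page so that each one can then be checked. It uses only Python's standard `html.parser` module. The sample page is invented, and actually requesting each target is left out to keep the example self-contained.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Return the link targets found in an HTML string, in document order."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Invented sample page.
page = '<p><a href="/products">Products</a> <a>No target</a> <a href="http://example.com/">Home</a></p>'
for link in extract_links(page):
    print(link)
```

A fuller link test would request each collected target and report those that fail to load.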

Performance testing

To ensure that the system has the required capacity, its performance has to be tested. The characteristics normally measured include execution time and response time. To identify bottlenecks, the system or application has to be tested under various conditions. Varying the number of users and what the users are doing helps identify weak areas that do not show up during normal use. When testing applications for the web, this kind of testing becomes very important. Since the users are not normally known and the number of users can vary dramatically, web applications have to be tested thoroughly. The general approach to performance testing should not vary, but the importance of this kind of test does (Schaefer, 2000). Testing web applications under extreme conditions is done by load and stress testing. These two are performed to ensure that the application can withstand, for example, a large number of simultaneous users or a large amount of data from each user. Other important characteristics of the web include download time and network speed.
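The idea of varying the number of simultaneous users and measuring response time can be sketched as below. This is a simplified illustration, not a real load testing tool: `handle_request` is a hypothetical stand-in that only sleeps, where a real test would send a request to the application.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id):
    """Stand-in for the system under test (assumption: replace with a real request)."""
    time.sleep(0.01)
    return user_id


def timed_call(user_id):
    """Return the response time, in seconds, of one simulated request."""
    start = time.perf_counter()
    handle_request(user_id)
    return time.perf_counter() - start


def run_load(users):
    """Issue one request per simulated user concurrently and return all response times."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        return list(pool.map(timed_call, range(users)))


for users in (1, 10, 50):
    times = run_load(users)
    mean_ms = sum(times) / len(times) * 1000
    print(f"{users:>3} users: mean {mean_ms:.1f} ms, max {max(times) * 1000:.1f} ms")
```

Comparing the figures across user counts is what points to bottlenecks: response times that grow sharply as users are added indicate a weak area.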

Usability

To ensure that the product will be accepted on the market, it has to appeal to users. There are several ways to measure usability and user response. For the web, this is often very important because of users' low tolerance for glitches in the interface or navigation. Because of the nearly complete lack of standards for web layout, this area depends on actual usage of the site to yield useful information. Microsoft has extensive standards, down to pixel level, for where, for example, buttons are to be placed when designing programs for Windows. The situation on the web is very different, with almost no standards at all for how a site layout should be designed.

Compatibility testing

This refers to different settings or configurations of, for example, the client machine, the server or external databases. On the web this can be a very intricate area to test because of the total lack of control over the client machine configuration or an external database. Will your site be compatible with different browser versions, operating systems or external interfaces? Testing every combination is normally not possible, so the usual approach is to identify the combinations most likely to be used.
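Selecting the most likely combinations can be sketched as below. The browser and operating system names and their usage shares are invented placeholders, and the sketch assumes the two choices are independent so that the shares can be multiplied, which will not hold exactly in practice.

```python
from itertools import product

# Hypothetical usage shares; real figures would come from site statistics.
browser_share = {"Browser A": 0.6, "Browser B": 0.3, "Browser C": 0.1}
os_share = {"OS 1": 0.7, "OS 2": 0.3}


def likely_combinations(browsers, systems, limit):
    """Return the `limit` most likely (browser, OS) pairs, assuming independent shares."""
    combos = [((b, o), browsers[b] * systems[o]) for b, o in product(browsers, systems)]
    combos.sort(key=lambda item: item[1], reverse=True)
    return [combo for combo, _ in combos[:limit]]


for browser, system in likely_combinations(browser_share, os_share, limit=3):
    print(f"{browser} on {system}")
```

With these invented shares, three of the six combinations already cover roughly 80 percent of the assumed usage.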

Security testing

To persuade customers to use Internet banking services or shop over the web, security must be high enough. Users must feel safe when submitting personal information to a site. Typical areas to test are directory setup, SSL, logins, firewalls and log files.

Security is an area of great importance as well as great extent, not least for the web. A lot of literature has been written on this subject and more will come. Due to the complexity and size of this particular subject, we will not cover this area more than the basic features and where one should put in extra effort.
